In early 2021, state media outlets in Russia and China circulated online stories raising vaccine safety concerns. These stories claimed a link between Western-developed vaccines and patient deaths.

The claims were false, but they served a clear objective: promote Russian and Chinese vaccines and sow mistrust in Western vaccines and governments.

This is just one example of media manipulation, a top threat to modern democracies and public safety. According to the Oxford Internet Institute, social media manipulation campaigns increased by 150% between 2017 and 2019. Over 40% of people now believe that social media has enabled polarization and foreign political meddling. We also know that some nation-states, like Russia, budget billions of dollars for disinformation efforts annually.

Governments, tech companies, and counter-disinformation teams rely on intelligence analysts to monitor media manipulation and its impact across the web. This work requires specialized assessment skills, along with OSINT tools that make data collection and analysis simple and efficient.

What is media manipulation and how can you spot it more effectively as an analyst?

Media manipulation: What’s at stake?

The European Parliament describes disinformation as verifiably false or misleading information that is “created, presented and disseminated for economic gain or to intentionally deceive the public, and may cause public harm.” This harm can undermine:

- Public health and safety. Preliminary research suggests that media manipulation influences citizens’ intent to get vaccinated or to pursue risky treatments. At scale, this can significantly affect transmission rates and strain healthcare systems.

Media manipulation also impacts public safety by co-opting social movements, shifting public opinion around global issues like climate change, and recruiting vulnerable individuals into terrorism. The European Parliament considers disinformation a human rights issue that violates privacy, democratic rights, and freedom of thought.

- Political processes. Media manipulation can build mistrust between populations and their governments, disrupt democratic processes, and exacerbate geopolitical tensions. Following the 2016 US presidential election, the US Department of Justice reported that Russia’s Internet Research Agency purchased over 3,500 Facebook ads, including ads supporting Trump, and operated a network of fake accounts posing as American activists.

- Financial security. Media manipulation takes a financial toll, costing the global economy an estimated $78B each year. This figure includes the costs of reputation management, stock market losses, and counter-disinformation efforts.

The struggle of countering media manipulation

Big tech organizations like Facebook have publicized their commitment to combating media manipulation on their networks. But documents leaked earlier in 2021 show that the company’s manipulation research and takedown efforts are lagging behind the spread of manipulated content.

According to the research organization RAND, most counter-disinformation techniques, like those used by Facebook, rely on a combination of human and machine analysis that leaves detection gaps at scale.

As if spotting the trolls weren’t hard enough, disinformation campaigns are highly organized, using bot networks, deepfakes, and sophisticated AI to amplify their reach and evade detection.

How to spot media manipulation: 6 guidelines

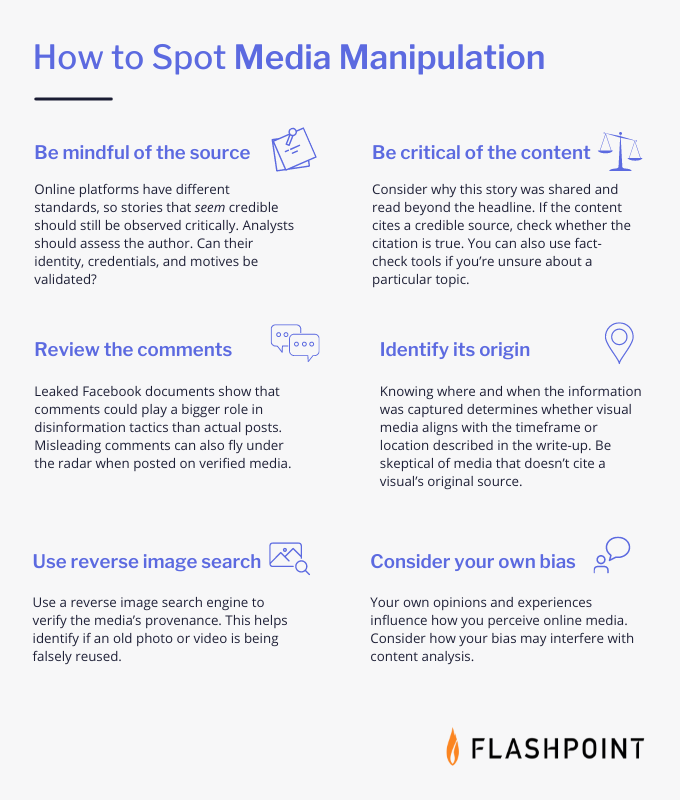

Identifying misleading content is easier said than done, but it is crucial for countering the negative consequences of media manipulation. Here are some tips to hone your analysis:

- Be mindful of the source

Online platforms apply different standards, so even stories that seem credible deserve critical scrutiny. Analysts should assess the author: can their identity, credentials, and motives be verified?

- Be critical of the content

Consider why the story was shared, and read beyond the headline. If the content cites a credible source, check that the source actually says what the story claims. If you’re unsure about a particular topic, use fact-checking tools.

- Review the comments

Leaked Facebook documents suggest that comments may play a bigger role in disinformation tactics than the posts themselves. Misleading comments can also slip under the radar when posted beneath content from verified media outlets.

- Identify its origin

Knowing where and when a photo or video was captured lets you check whether it matches the timeframe and location described in the write-up. Be skeptical of media that doesn’t cite a visual’s original source.

- Use reverse image search

Use a reverse image search engine to verify the media’s provenance. This can reveal whether an old photo or video is being reused out of context.

- Consider your own bias

Your own opinions and experiences influence how you perceive online media. Consider how your bias may interfere with content analysis.
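How any particular reverse image search engine matches images is beyond the scope of this post, but one common building block for spotting a recycled or lightly edited photo is perceptual hashing: reduce an image to a compact fingerprint that survives small changes, then compare fingerprints. The Python sketch below implements a basic "average hash" using only the standard library. It assumes images are already decoded into grayscale 2D lists at least 8x8 pixels in size; the function names (including the hypothetical `likely_same_image` helper) and the 5-bit match threshold are illustrative assumptions, not taken from Echosec or any search engine.

```python
from typing import List

# A grayscale image as rows of pixel intensities (e.g. 0-255).
Image = List[List[float]]


def downsample(img: Image, size: int = 8) -> Image:
    """Shrink img to size x size by averaging each rectangular block of pixels.

    Assumes the image is at least size x size, so no block is empty.
    """
    h, w = len(img), len(img[0])
    small = []
    for by in range(size):
        y0, y1 = by * h // size, (by + 1) * h // size
        row = []
        for bx in range(size):
            x0, x1 = bx * w // size, (bx + 1) * w // size
            block = [img[y][x] for y in range(y0, y1) for x in range(x0, x1)]
            row.append(sum(block) / len(block))
        small.append(row)
    return small


def average_hash(img: Image, size: int = 8) -> int:
    """Return a size*size-bit fingerprint: bit is 1 where a block is brighter than the mean."""
    flat = [p for row in downsample(img, size) for p in row]
    mean = sum(flat) / len(flat)
    bits = 0
    for p in flat:
        bits = (bits << 1) | (1 if p > mean else 0)
    return bits


def hamming(a: int, b: int) -> int:
    """Count the bits that differ between two hashes."""
    return bin(a ^ b).count("1")


def likely_same_image(a: Image, b: Image, threshold: int = 5) -> bool:
    """Hypothetical helper: treat hashes within `threshold` differing bits as the same picture."""
    return hamming(average_hash(a), average_hash(b)) <= threshold


# Usage: a 32x32 horizontal gradient, a uniformly brightened copy, and an inverted image.
original = [[x * 8 for x in range(32)] for _ in range(32)]
brightened = [[p + 10 for p in row] for row in original]
inverted = [[255 - p for p in row] for row in original]

print(likely_same_image(original, brightened))  # True: every block and the mean shift equally
print(likely_same_image(original, inverted))    # False: all 64 bits flip
```

Average hashing tolerates uniform brightness changes and, roughly, resizing, but crops, mirroring, or heavy edits can defeat it. Treat a hash match as a lead to verify, not as proof of where an image came from.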

Developing critical analysis skills is only part of the story. Because media manipulation is so widespread, analysts also need advanced tools to monitor it at scale.

Open-source intelligence (OSINT) tools like Echosec help by making a wide range of public sources easily searchable, not just mainstream outlets and major social media sites. This includes alt-tech platforms, fringe social media, and global sources relevant for assessing media in different regions, like Russia and China.

Facebook’s disinformation battle shows that AI alone is not a perfect or complete solution for countering disinformation. But used alongside human analysis, OSINT tools that apply natural language processing can ease the load on overwhelmed intelligence teams.

Countering the negative impacts of media manipulation starts with identifying and understanding its proliferation online. As technology struggles to keep up with manipulation tactics, analysts must develop critical assessment skills and invest in OSINT tools that support more comprehensive detection.